This self-bootstrapped project was completed in 07/2016. Its aim was to design an interactive modeling tool for the schematic design phase of the architectural design process. The object recognition component was implemented in C++ with an Intel RealSense R200 depth camera. Modeling and simulation were done in Rhino with Grasshopper. The project was a collaboration with Yuling Kong and Changyu Gao.

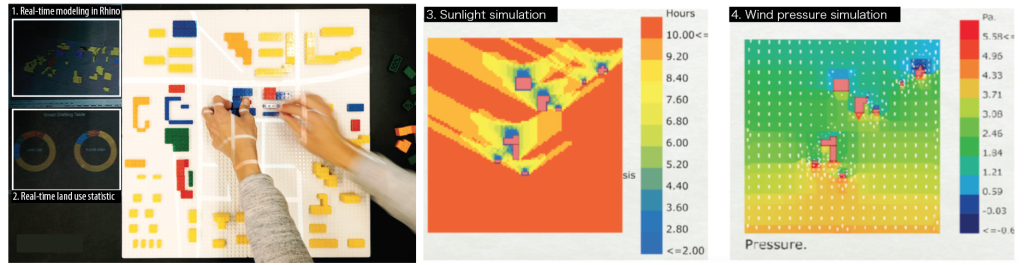

One pain point of the design process is that it is difficult to see a design's performance during ideation. Simulation software packages require different file formats and different computing platforms, and they consume a lot of computing time, all of which slows down ideation in schematic design. Smart Modeling Table aims to connect the physical and the virtual with the help of computer vision. A physical model built from LEGO bricks can be accurately converted to a digital model in real time, and the designer can activate a variety of simulation algorithms, including sunlight analysis and wind simulation, to evaluate the model's performance.

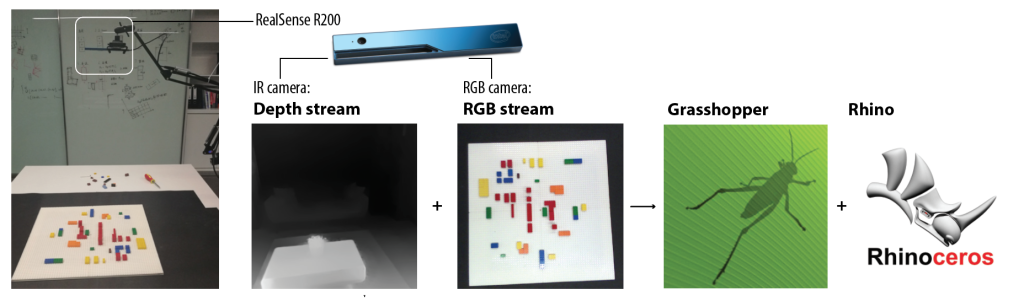

Intel’s RealSense R200 depth camera is used mainly to scan the position and height of the LEGO bricks. It has both infrared and RGB cameras, so it can capture the depth and color of objects simultaneously. The depth and color information is processed and fused into three-dimensional modeling information in Rhino. The simulation algorithms in Grasshopper are activated when a Leap Motion camera recognizes a predefined hand gesture.
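As a rough illustration of how a depth reading could become a brick height, here is a minimal sketch. It assumes the camera looks straight down at the table and that the depth of the empty table surface is known; the function names, the 600 mm table distance, and the rounding rule are all hypothetical and are not taken from the original C++ implementation. The 9 mm brick height is the value used in this write-up.

```python
BRICK_HEIGHT_MM = 9.0  # brick height as stated in this write-up


def brick_layers(table_depth_mm, measured_depth_mm, brick_height_mm=BRICK_HEIGHT_MM):
    """Estimate how many bricks are stacked under one depth sample.

    Assumes a top-down camera, so height = table depth - measured depth.
    """
    height_mm = table_depth_mm - measured_depth_mm
    if height_mm <= 0:
        # At or below the table surface (e.g. sensor noise): no brick here.
        return 0
    # Round to the nearest whole number of layers to absorb small depth errors.
    return int(height_mm / brick_height_mm + 0.5)


def fuse_cell(color_name, table_depth_mm, measured_depth_mm):
    """Combine the color and depth readings of one grid cell into a record."""
    return {
        "color": color_name,
        "layers": brick_layers(table_depth_mm, measured_depth_mm),
    }


# Hypothetical readings: table at 600 mm, top of the stack at 582 mm.
cell = fuse_cell("red", 600.0, 582.0)
print(cell)  # {'color': 'red', 'layers': 2}
```

Rounding to whole layers is one simple way to make the result tolerant of a few millimetres of depth noise; a real pipeline would likely also average over the pixels of each cell.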

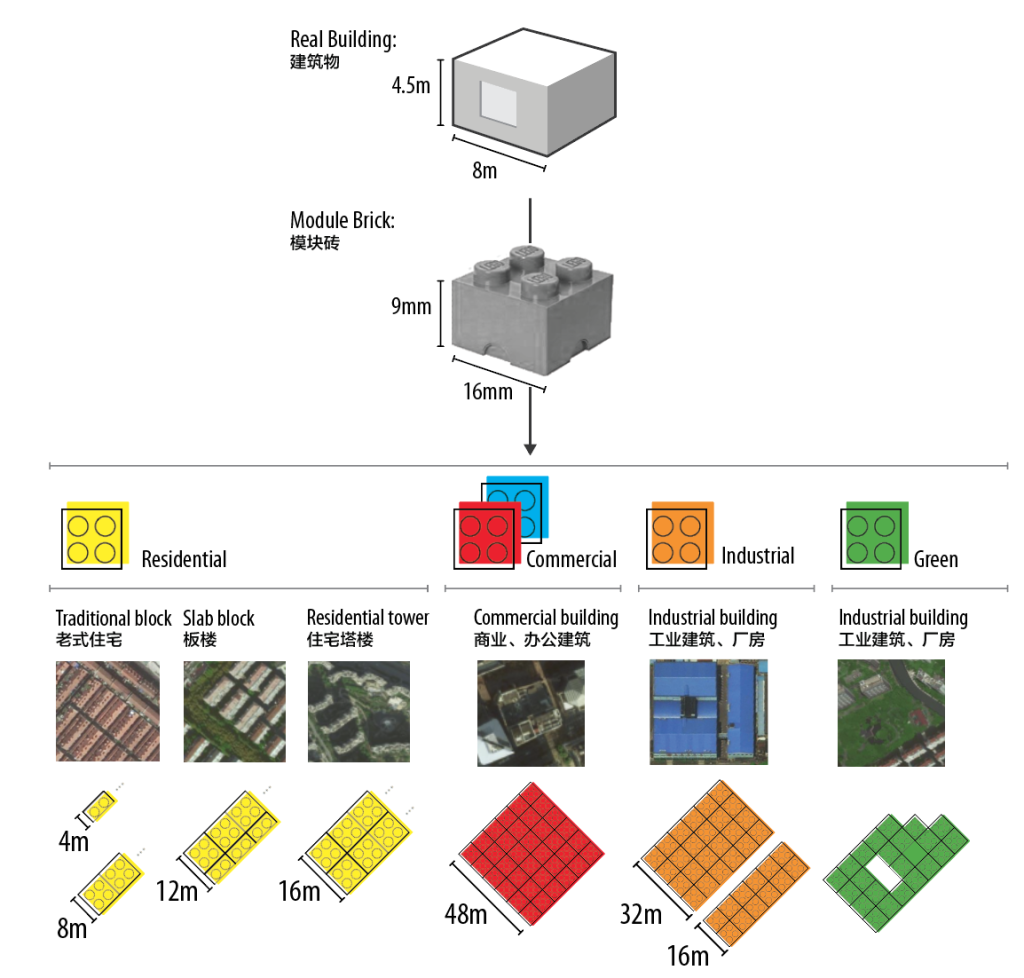

We use LEGO bricks to represent building volumes in the urban environment at a scale of 1:500. A 2×2 LEGO brick, 9 mm high and 16 mm wide, represents a building 4.5 m high and 8 m wide. Based on this principle, the figure below shows the corresponding LEGO brick representations of typical buildings in the city.
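The scale arithmetic can be written out as a short sketch. The 1:500 scale and the 9 mm and 16 mm brick dimensions come from the text above; the 8 mm stud pitch is simply 16 mm divided by 2 studs, and the function names are hypothetical.

```python
SCALE = 500             # 1:500, as stated above
BRICK_HEIGHT_MM = 9.0   # height of one brick layer
STUD_PITCH_MM = 8.0     # a 2x2 brick is 16 mm wide, so 8 mm per stud


def model_mm_to_real_m(length_mm, scale=SCALE):
    """Convert a length on the table (mm) to a real-world length (m)."""
    return length_mm * scale / 1000.0


def building_size(layers, studs_wide):
    """Return (height_m, width_m) of the building a brick stack represents."""
    height_m = model_mm_to_real_m(layers * BRICK_HEIGHT_MM)
    width_m = model_mm_to_real_m(studs_wide * STUD_PITCH_MM)
    return height_m, width_m


print(building_size(1, 2))  # (4.5, 8.0): one 2x2 brick
print(building_size(3, 4))  # (13.5, 16.0): three layers of 4-stud-wide bricks
```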

The LEGO recognition process mainly includes the following 5 steps:

- Match the images from the two cameras, then find the four vertices of the board through color contrast.

- Connect the four vertices to form the four boundaries.

- Calibrate the perspective, transforming the image into a standard square as seen from the top.

- Adjust the tone curve of the image to make the colors more distinct.

- Convert the color and height information of the bricks into three-dimensional model information and store it in files for Grasshopper to read and model in Rhino.
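The perspective calibration step can be illustrated with a standard four-point homography. The sketch below is not the original C++ code: it is a dependency-free Python version that solves for the 3×3 projective transform mapping four detected corners onto a square, and the corner coordinates and the 320-pixel target size are made-up example values.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src, dst):
    """Return the 9 row-major entries of H (h33 = 1) with H * src[i] ~ dst[i]."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per correspondence, eight unknowns in total.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]


def warp_point(h, x, y):
    """Apply the homography to one image point."""
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


# Hypothetical board corners found in the camera image (pixels), listed
# clockwise from top-left, and the top-view square they should map to.
corners = [(120, 80), (520, 95), (560, 400), (90, 380)]
square = [(0, 0), (320, 0), (320, 320), (0, 320)]
H = homography(corners, square)
for corner in corners:
    print(corner, "->", warp_point(H, *corner))
```

Once `H` is known, every pixel inside the detected quadrilateral can be mapped into the square top view, so the brick grid lines up with image rows and columns. Image libraries such as OpenCV provide the same operation ready-made.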

Two kinds of analysis are run on the digital model. The first is a volume analysis of the different building functions, which helps designers optimize the mix of functions on the site. The second simulates the sunlight and wind environment of the model using the Ladybug and Butterfly plug-ins in Grasshopper. During the design process, the simulation can be triggered by hand gestures, and the designer can adjust the model according to the real-time results, ultimately arriving at a better design.
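The volume analysis can be sketched as a simple aggregation. The sketch assumes that brick color encodes building function and that each record is one stack of 2×2 bricks; the color-to-function mapping and the sample data are hypothetical. The per-brick volume follows from the 1:500 scale described earlier (8 m × 8 m × 4.5 m).

```python
from collections import defaultdict

# Hypothetical mapping; the real color coding is not specified in this write-up.
COLOR_TO_FUNCTION = {
    "red": "commercial",
    "yellow": "residential",
    "blue": "office",
}

# One 2x2 brick represents an 8 m x 8 m x 4.5 m volume at 1:500.
BRICK_VOLUME_M3 = 8.0 * 8.0 * 4.5


def volume_by_function(stacks):
    """Sum the represented volume (m^3) per building function.

    `stacks` is an iterable of (color, layers) pairs, one per brick stack.
    """
    totals = defaultdict(float)
    for color, layers in stacks:
        totals[COLOR_TO_FUNCTION[color]] += layers * BRICK_VOLUME_M3
    return dict(totals)


def function_shares(totals):
    """Return each function's share of the total volume (0.0 to 1.0)."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {name: vol / grand_total for name, vol in totals.items()}


stacks = [("red", 2), ("yellow", 3), ("yellow", 1), ("blue", 2)]
totals = volume_by_function(stacks)
print(totals)                   # {'commercial': 576.0, 'residential': 1152.0, 'office': 576.0}
print(function_shares(totals))  # {'commercial': 0.25, 'residential': 0.5, 'office': 0.25}
```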

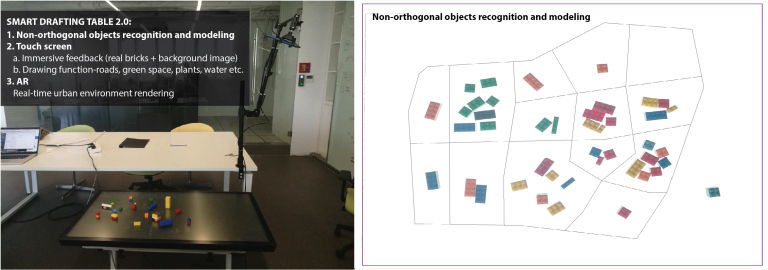

Smart Modeling Table 1.0 is just a quick prototype of an interactive modeling interface for designers. In the future, we will develop more robust real-time analysis functions and extend the modeling capabilities to non-regular objects. We will replace the background board with a touch-screen display, which will make it possible both to draw basic urban elements, such as roads and water systems, and to interact with the simulation feedback. Beyond enhancing the design functions themselves, we are also considering integrating an AR rendering system, which would enable designers to view the designed scene in its real setting through mobile phones, tablets, and other devices.

We hope that one day, as computer vision and simulation algorithms become more widespread, this system can run on cellphones or tablets. Smart Modeling Table will make the design process more joyful and rational.